Real-time data warehousing with Apache Spark and Delta Lake

Reading Time: 2 minutes

Financial institutions globally deal with massive data volumes that call for large-scale data warehousing and effective processing of real-time transactions. In this blog, we shall discuss the current challenges in these areas and also understand how Delta lakes go a long way in overcoming some common hurdles. We would be exploring Apache Spark architecture for this use case which comes under the purview of data engineering.

Problem Statement

Let us begin with the exploration of a use case: A Real-time transaction monitoring service for an online financial firm that deals with products such as “Pay Later and Personal Loan”. This firm needs:

- An alert mechanism to flag off fraud transactions – If a customer finds a small loophole in the underwriting rules then he can exploit the system by taking multiple PLs and online purchases through the Pay Later option which is very difficult and sometimes impossible to recover.

- Speeding up of troubleshooting and research in case of system failure or slowdown

- Tracking and evaluation of responses to Marketing campaigns, instantaneously

To achieve the above they want to build a near-real-time (NRT) data lake:

- To store ~400TB – last 2 years of historical transaction data

- Handle ~10k transaction records every 5 minutes results of various campaigns.

Note:

A typical transaction goes through multiple steps,

- Capturing the transaction details

- Encryption of the transaction information

- Routing to the payment processor

- Return of either an approval or a decline notice.

And the data lake should have a single record for each transaction and it should be the latest state.

Solution Choices: Using Data Lake Architecture

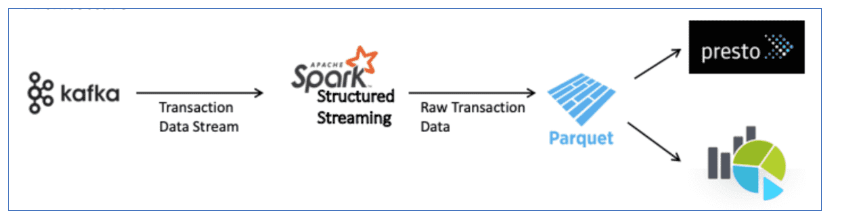

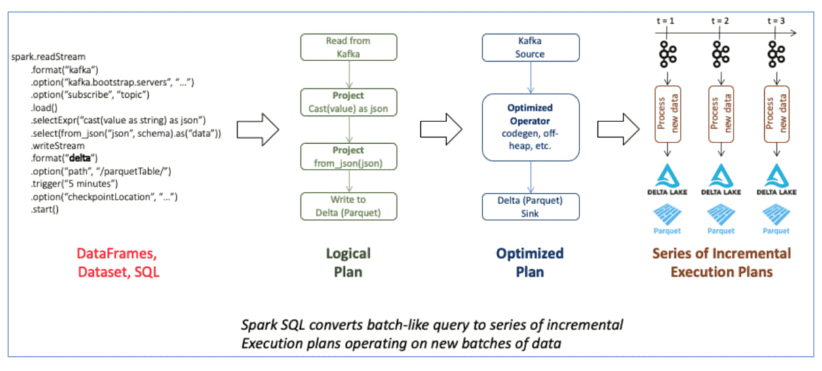

Approach 1: Create a Data Pipeline using Apache Spark – Structured Streaming (with data deduped)

A three steps process can be:

- Read the transaction data from Kafka every 5 minutes as micro-batches and store them as small parquet files

- Merge all the new files and the historical data to come up with the new dataset at a regular interval, maybe once every 3 hrs and the same can be consumed downstream through any of the querying systems like Presto, AWS Athena, Google BigQuery, etc.

- Create a Presto or Athena table to make this data available for querying.

Architecture

Challenges

- Preparing the consolidated data every 3 hours becomes challenging when the dataset size increases dramatically.

- If we increase the batch execution interval from 3 hours to more, say 6 or 12 hours then this isn’t NRT data lake,

- Any bug in the system if identified by the opportunists, can be exploited and can’t be tracked by IT teams immediately. By the time they see this on the dashboard (after 6 or 12 hours), the business would have already lost a significant amount of money.

- It’s also not very useful for monitoring specific event based campaign, e.g. 5% cashback on food delivery, on the day of “World Cup – Semi-final match”.

Approach 2: Create a Data Pipeline using Apache Spark – Structured Streaming (with duplicate data)

A two steps process can be

- Read the transaction data from Kafka every 5 minutes as micro-batches and store them as small parquet files without any data deduplication,

- Create an AWS Athena table on top of it and query the data properly,

Architecture

Challenges

Adding this additional “where” condition adds extra latency to each of the queries and it would soon become an extra overhead when the data reaches petabytes scale.

Summary

- In the 1st approach, there are 2 copies of the same data, one is the raw data and the other is the transaction data with the latest state. The raw copy of the data isn’t of any use and is also maintained in the Kafka topic.

- In the 2nd approach, we’re maintaining a single copy of the transaction base but it has duplicates. And we always have to add the filter condition of removing the stale transactions in our query.

Is there any way we can maintain only one copy of the transaction base with the latest transaction state and can provide an easy means to traverse through different snapshots?

Can we add the ACID properties to that single copy of the transaction base parquet table?

Delta Lake by Databricks addresses the above issues when used along with Apache Spark for not just Structured Streaming, but also for use with DataFrame (batch based application).

A Quick Introduction to Delta Lake

Enterprises have been spending millions of dollars getting data into data lakes with Apache Spark. The aspiration is to do Machine Learning on all that data – Recommendation Engines, Forecasting, Fraud/Risk Detection, IoT & Predictive Maintenance, Genomics, DNA Sequencing, and more. But the majority of the projects fail to see fruition due to unreliable data and data that is not ready for ML.

~60% of big data projects fail each year according to Gartner.

These include data reliability challenges with data lakes, such as:

- Failed production jobs that leave data in a corrupt state requiring tedious recovery

- Lack of schema enforcement creating inconsistent and low-quality data

- Lack of consistency making it almost impossible to mix appends and reads, batch and streaming

That’s where Delta Lake comes in. Some salient features are:

- Open format based on parquet

- Provides ACID transactions

- Apache Spark’s API

- Apache Spark’s API

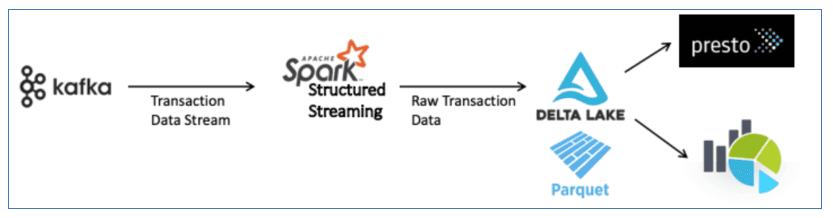

Project Architecture

A three steps process for our use case

- Create a delta table by reading historical transaction data,

- Read the transaction data from Kafka every 5 minutes as micro-batches,

- Then merge them with the existing delta table

Conclusion

The table below indicates how solutions with Data Lakes & Delta lake architecture compare with each other on different parameters and highlights the advantages that Delta Lakes have to offer.

| Data Lake Approach | Delta Lake Approach |

|---|---|

|

Writing Unsafe Data If a big ETL job fails while writing to a data lake it causes the data to be partially written or corrupted which highly affects the data quality |

ACID transactions If a big ETL job fails while writing to a data lake it causes the data to be partially written or corrupted which highly affects the data quality |

|

No consistency in data when mixing batch and streaming Developers need to write business logic separately into a streaming and batch pipeline using different technologies (creating inconsistency in code & data) Additionally, there is no possibility to have concurrent jobs reading and writing from/to the same data |

Unified batch and stream sources and sinks The same functions can be applied to both batch and streaming data and with any change in the business logic, we can guarantee that the data is consistent in both sinks. Delta also allows reading consistent data while at the same time new data is being ingested. |

|

Schema mismatch We know incoming data can change over time. In a classical Data Lake, it will likely result in data type compatibility issues, corrupted data entering your data lake, etc. |

Schema enforcement With Delta, a different schema in incoming data can be prevented from entering the table to avoid corrupting the data Schema evolution If enforcement isn’t needed, users can easily change the schema of the data to intentionally adapt to the data changing over time |

|

No data versioning Data is constantly modified so if a data scientist wants to reproduce an experiment with the same parameters from a week ago it would not be possible unless data is copied multiple times |

Time travel With Delta, users can go back to an older version of data for experiment reproduction, fixing wrong updates/deletes or other transformations that resulted in bad data, audit data, etc. |

|

Metadata management With all the different types of data stored in a data lake and the transformations applied to the data, it’s hard to keep track of what is stored in the data lake or how this data is transformed |

Metadata handling With Delta, we can use the transaction log users to see metadata about all the changes that were applied to the data |

About the Author

Sidhartha Ray is Technical Lead at Sigmoid with expertise in Big Data – Apache Spark, Structured Streaming, Kafka, AWS Cloud and Service-less architecture. He is passionate about, designing and developing large scale cloud-based data pipelines and learning & evaluating new technologies.

Featured blogs

Subscribe to get latest insights

Talk to our experts

Get the best ROI with Sigmoid’s services in data engineering and AI

Featured blogs

Talk to our experts

Get the best ROI with Sigmoid’s services in data engineering and AI