Using Nginx Ingress Controller to manage HTTP/1.1 and HTTP/2 Protocols

Load balancers are crucial for exposing applications to external traffic. A load balancer acts as a reverse proxy and distributes network or application traffic across servers. Popular options include HAProxy, the GKE ingress controller, nginx, and more. However, these load balancers come with challenges around configuration, performance, and the protocol (HTTP/1.1 or HTTP/2) over which they need to deliver data. Handling these requires some configuration changes when deploying the controllers.

To manage HTTP/1.1 and HTTP/2 requests, the nginx ingress controller, a specialized load balancer for Kubernetes (and other containerized) environments, provides the best option to overcome these challenges and enable the services. Its advantages include adapting traffic from various platforms, managing traffic to services within a cluster, and monitoring and automatically updating the load-balancing rules.

Upgrading to the newest protocol version is common practice when moving to a newer load balancer, but it may not suit every service. In this article, we discuss how we used the Ingress controller to manage the HTTP/1.1 and HTTP/2 protocols. Most users today prefer HTTP/2 to HTTP/1.1 because it is faster and more reliable and because multiplexing avoids delays between requests. However, HTTP/1.1 has benefits of its own, such as being more server-friendly.

Why is HTTP/1.1 More Server-friendly Than HTTP/2?

When we enabled HTTP/2 over HTTP/1.1, we noticed that servers behind our HTTP/2 load balancers had higher CPU load and slower response times than our other servers. On closer inspection, we realized that although the average number of requests remained the same, the actual flow of requests had become spiky. Instead of a steady flow of requests, there were short bursts of many requests. Although we had overprovisioned capacity based on previous traffic patterns, it wasn’t enough to deal with the new request spikes. Hence the responses to requests were delayed and timed out.

Benefits of Using HTTP/1.1 and Deploying Nginx Controllers for it

- HTTP/1.1 requests must be processed in series on a single connection

- Browsers using HTTP/1.1 limit the number of concurrent connections, and therefore concurrent requests, to a given origin, so each user’s browser throttles requests to our server and keeps our traffic smooth

- Deploying nginx controllers using Helm charts

When deploying services with Helm charts, it is common practice to install a stable version of the chart directly. But direct installation does not allow the chart's files to be edited, so the chart needs to be downloaded locally and its configuration changed there.

helm fetch --untar stable/nginx-ingress

After downloading the chart, change its configuration to suit the services in question.

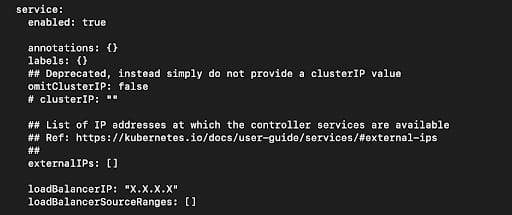

Since a static IP is maintained, there is no need to worry about external IPs every time nginx needs to be upgraded.

The configuration changes that make the IP static are made in the values.yaml file.
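As a rough illustration of what such a change could look like, the sketch below writes a small override file rather than editing values.yaml in place. It assumes the downloaded chart exposes a `controller.service.loadBalancerIP` value and that the cloud provider honors a pre-reserved address; the IP address and the file name `static-ip-values.yaml` are hypothetical placeholders.

```shell
# Hypothetical reserved address; replace it with the static IP reserved in your cloud project.
STATIC_IP="203.0.113.10"

# Write an override file (hypothetical name) instead of editing the chart's values.yaml.
cat > static-ip-values.yaml <<EOF
controller:
  service:
    # Assumed chart value: asks the provider to bind the LoadBalancer Service to this address.
    loadBalancerIP: "${STATIC_IP}"
EOF

# Show the generated file for review before installing.
cat static-ip-values.yaml
```

The file could then be passed at install time from the chart directory, for example with `helm install --name nginx-ingress -f static-ip-values.yaml .` (Helm 2 syntax, matching the commands in this article).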

- Routing request on HTTP/1.1 and HTTP/2

Consider two hosts, abc.com and xyz.com, that front a single service, where abc.com should accept HTTP/1.1 calls and xyz.com should accept HTTP/2 calls.

In this case, two nginx controllers need to be installed in the cluster with different names.

- Configuring Acceptance of HTTP/1.1 calls

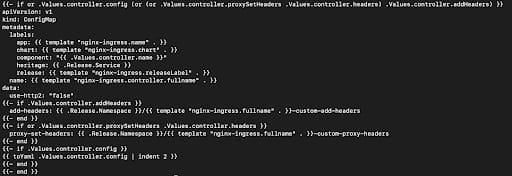

Once the chart is downloaded, a flag needs to be added to the file templates/controller-configmap.yaml to make abc.com accept HTTP/1.1 calls.

In the data block, set use-http2: "false"
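An alternative to editing the template by hand is to supply the same setting through per-controller values files. The sketch below is only that, a sketch: it assumes the chart exposes `controller.config` (entries copied into the controller ConfigMap) and `controller.ingressClass`, and the file names and class names `nginx-http1` and `nginx-http2` are hypothetical.

```shell
# Write a values file for one controller.
# $1 = output file, $2 = ingress class name, $3 = value for use-http2 ("true" or "false")
write_values() {
  cat > "$1" <<EOF
controller:
  # Assumed chart value: each controller only serves Ingress resources of its own class.
  ingressClass: "$2"
  config:
    # Ends up in the data block of the controller ConfigMap.
    use-http2: "$3"
EOF
}

# Hypothetical names: one controller per protocol.
write_values http1-values.yaml nginx-http1 false   # for abc.com (HTTP/1.1 only)
write_values http2-values.yaml nginx-http2 true    # for xyz.com (HTTP/2 enabled)
```

Each file could then back its own release, for example `helm install --name nginx-http1 -f http1-values.yaml .` and `helm install --name nginx-http2 -f http2-values.yaml .`, with each Ingress resource selecting its controller through the `kubernetes.io/ingress.class` annotation.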

After making these changes, install the nginx controller from the local chart directory with the command below.

helm install --name nginx-ingress .

After installation, map the host to the LoadBalancer IP in Cloudflare.
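Once the hosts resolve, one way to check which protocol a host actually negotiates is to ask curl to report it. The helper below is a hypothetical convenience function, not part of the original setup; it relies on curl's `%{http_version}` write-out variable and assumes the hosts are served over HTTPS. If the DNS record is proxied through Cloudflare, the result reflects Cloudflare's edge rather than the controller itself.

```shell
# Print the HTTP version curl negotiated with a host, for example "1.1" or "2".
# --http2 makes curl offer HTTP/2 and fall back to HTTP/1.1 if the server declines it.
negotiated_http_version() {
  curl --silent --http2 --output /dev/null --write-out '%{http_version}\n' "https://$1/"
}

# Example hosts from this article; uncomment where these names resolve to your controllers.
# negotiated_http_version abc.com
# negotiated_http_version xyz.com
```

With the configuration described in this article, abc.com would be expected to report HTTP/1.1 and xyz.com HTTP/2.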

If the services in question need neither HTTP/1.1 nor a static IP, no configuration changes are required and the chart can be installed directly with the command below.

helm install --name nginx-ingress stable/nginx-ingress

The values.yaml changes described earlier are what make the IP static.

- To use a different protocol for other services, another nginx controller must be deployed in the cluster, because the enabled protocols apply by default to all external hosts served by a controller.

- The protocol cannot be changed through annotations on the service. It changes only when the corresponding nginx configuration files are changed.

- Whenever nginx configuration changes are needed, the release can be upgraded in place without deleting the previous version.

About the Author

Dakshraj Goyal is a DevOps Engineer at Sigmoid. He loves to explore new technologies that light up and boost the DevOps culture. In his free time he loves to play cricket and watch the NBA.
