Modernizing legacy architectures using GenAI-powered Knowledge Graphs


A decade ago, enterprises started migrating their data platforms and associated applications to the cloud, but most stopped at lift-and-shift: legacy systems were rehosted, not reengineered. Today, these data platforms, such as legacy data warehouses and data lakes, are complex, costly, and unfit for AI-native business demands.

Legacy platforms often require massive manual effort to restructure and optimize. However, according to McKinsey, GenAI tools have enabled software engineers to complete coding tasks up to twice as fast, reducing the time needed for documentation and pipeline development by 50%. GenAI is helping businesses unlock value from data, accelerate insight, and compete at the speed of innovation.

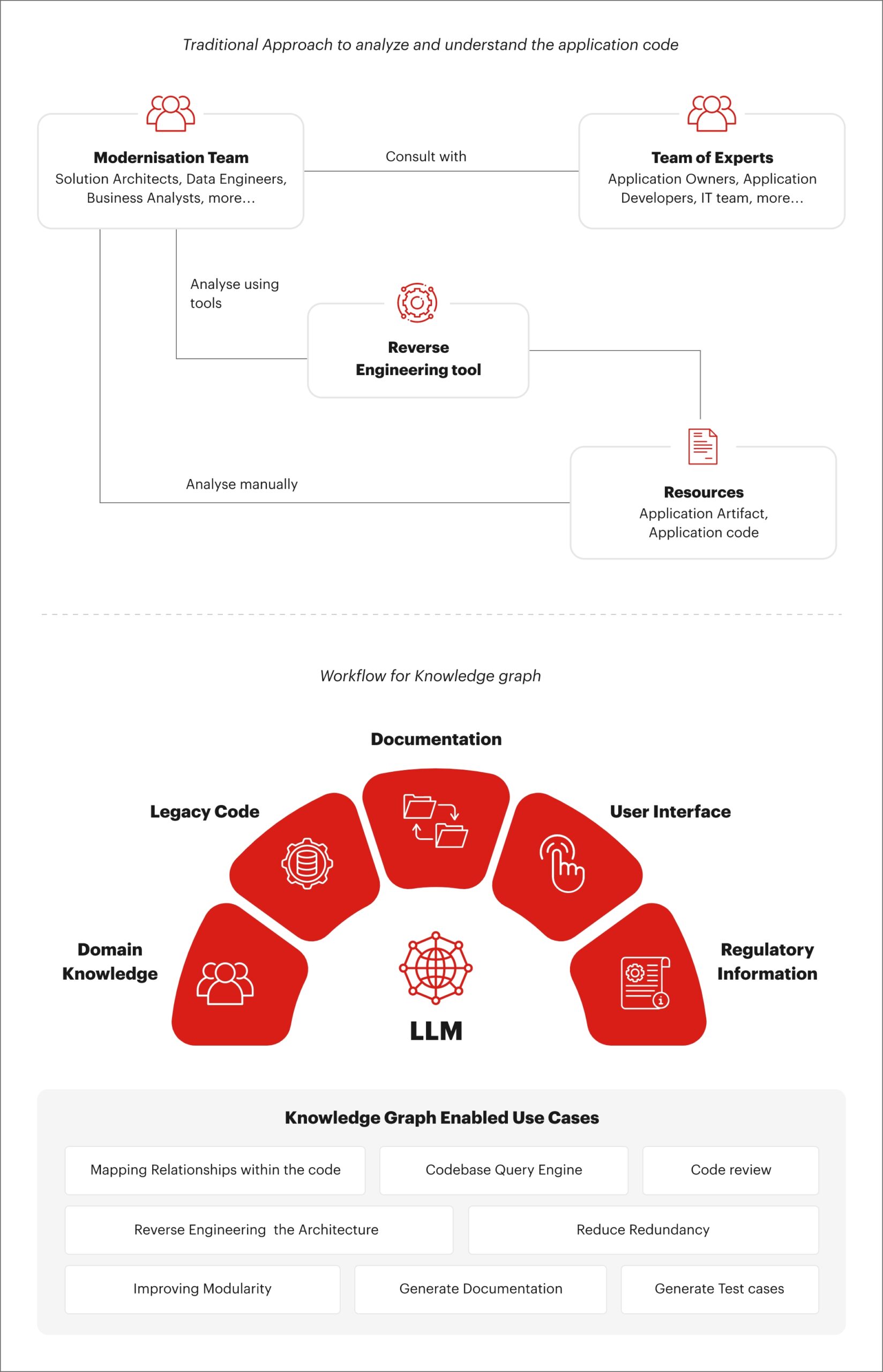

Why traditional modernization falls short

Most “lift and shift” migrations rehost legacy systems without re-architecting them, creating platforms that are structurally unfit for modern AI workloads. In the Agentic AI era, where systems must reason, act autonomously, and iterate in real time, these rigid architectures become bottlenecks. The deeper issues often lie in fragmented processes, undocumented logic, siloed teams, and deep entanglement with critical business workflows. The result is unclear ROI, stalled adoption, and a widening gap between data capabilities and business outcomes.

Engineering teams face recurring questions:

- How can we understand legacy systems when documentation is missing and domain knowledge is lost?

- Can we preserve business logic while translating code across tech stacks?

- How do we minimize disruption while ensuring the new system maintains functional parity and delivers the same outcomes?

These challenges call for a smarter approach, one that’s automated, insight-driven, and GenAI-powered.

Enabling structural visibility with Knowledge Graphs

Modernizing legacy systems or building AI copilots for developers requires deep visibility into how applications are architected, not just line-by-line, but at the system level. One of the most effective ways to enable this visibility is by converting the codebase into a knowledge graph that exposes entities, relationships, and behavioral semantics. Let’s explore how GenAI transforms legacy systems through a knowledge-graph-driven approach.

Fig.1. Illustrative: Traditional vs Knowledge Graph-based Code Transformation Approach

From Codebase to Knowledge Graph

A GenAI-assisted approach to legacy migration is powered by technologies such as context engines, prompt engineering, foundation models, and self-validation loops. Here’s how that transformation happens in practice:

- Parsing the Codebase: Structuring unstructured code

The process begins by systematically parsing the application code using Abstract Syntax Tree (AST) tools or language-specific parsers. These tools break down source files into structured representations of programming constructs to capture files, classes, functions, variables, and their scopes.
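As a rough illustration of this parsing step, the sketch below uses Python's built-in `ast` module as a stand-in for whichever language-specific parser a real legacy stack would need. The sample module, the `extract_entities` helper, and the tuple layouts are assumptions made for this example, not part of any particular tool.

```python
import ast

# Hypothetical legacy module, used only for illustration.
SAMPLE_SOURCE = """
class Invoice:
    def total(self, items):
        return round_amount(sum(items))

def round_amount(value):
    return round(value, 2)
"""


def extract_entities(source):
    """Return (entities, call_edges) found in one source file."""
    tree = ast.parse(source)
    entities = []    # (kind, qualified_name, line_number)
    call_edges = []  # (caller, callee) for plain-name calls only

    def visit(node, scope):
        for child in ast.iter_child_nodes(node):
            if isinstance(child, ast.ClassDef):
                name = f"{scope}.{child.name}" if scope else child.name
                entities.append(("class", name, child.lineno))
                visit(child, name)
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                name = f"{scope}.{child.name}" if scope else child.name
                entities.append(("function", name, child.lineno))
                # Record calls such as foo(); method calls like obj.foo()
                # are skipped to keep the sketch short.
                for inner in ast.walk(child):
                    if isinstance(inner, ast.Call) and isinstance(inner.func, ast.Name):
                        call_edges.append((name, inner.func.id))
                visit(child, name)
            else:
                visit(child, scope)

    visit(tree, "")
    return entities, call_edges


entities, call_edges = extract_entities(SAMPLE_SOURCE)
for kind, name, line in entities:
    print(kind, name, line)
```

The qualified names (for example `Invoice.total`) give each entity a stable identifier, which is what later steps would use as node keys.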

- Generating semantic summaries with LLMs

Once the code is parsed into discrete entities, we can apply LLMs (large language models) to generate summaries that contextualize each component, describing what it does, how it connects to other components, and what its role is in the broader system.
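One way this summarization step could be wired up is sketched below. The `CodeEntity` shape, the prompt wording, and the `placeholder_llm` function are all hypothetical; the model is passed in as a plain callable, so no specific vendor API is assumed, and the placeholder returns a canned string, not a real summary.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class CodeEntity:
    """One parsed unit of code (hypothetical shape for this sketch)."""
    kind: str
    name: str
    source: str
    callees: Tuple[str, ...] = ()


def build_prompt(entity: CodeEntity) -> str:
    """Assemble the context a model needs to describe a single entity."""
    callees = ", ".join(entity.callees) or "none detected"
    return (
        "You are documenting a legacy codebase.\n"
        f"Entity: {entity.kind} `{entity.name}`\n"
        f"Calls: {callees}\n"
        f"Source:\n{entity.source}\n"
        "In two sentences, describe what it does and its role in the system."
    )


def summarize_entities(
    entities: List[CodeEntity], llm: Callable[[str], str]
) -> Dict[str, str]:
    """Map each entity name to the summary returned by the supplied model."""
    return {entity.name: llm(build_prompt(entity)).strip() for entity in entities}


def placeholder_llm(prompt: str) -> str:
    """Stand-in for a real model call; echoes the entity line of the prompt."""
    entity_line = prompt.splitlines()[1]
    return f"[summary pending] {entity_line}"


sample_entities = [
    CodeEntity(
        kind="function",
        name="round_amount",
        source="def round_amount(value):\n    return round(value, 2)",
        callees=("round",),
    )
]
summaries = summarize_entities(sample_entities, placeholder_llm)
print(summaries["round_amount"])
```

Including the detected callees in the prompt is a design choice in this sketch: it gives the model some of the surrounding context it needs to describe how a component connects to others, not just what it does in isolation.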

- Building the Knowledge Graph: Encoding application logic as relationships

The final step is to store both the parsed structure and the semantic metadata in a graph database. This database serves as a dynamic representation of the application codebase, where nodes represent code entities and edges model relationships such as:

- Calls, inherits, depends_on, defined_in, and more

- Ownership, such as which developer owns which module

- Runtime insights, such as caller-callee traces, when call graph integration is available

The knowledge graph becomes a living index of the system, one that supports modernization and technical debt analysis.
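To make the node-and-edge model concrete, here is a minimal in-memory sketch. It is a stand-in for a real graph database, and the `CodeGraph` class, the entity names, and the summaries are hypothetical. It shows the kind of question the graph can answer, in this case which entities would be affected if one function changes.

```python
from collections import defaultdict, deque


class CodeGraph:
    """Tiny in-memory stand-in for a graph database (illustrative only)."""

    def __init__(self):
        self.nodes = {}                     # name -> properties
        self.out_edges = defaultdict(list)  # source -> [(relation, target)]
        self.in_edges = defaultdict(list)   # target -> [(relation, source)]

    def add_node(self, name, kind, summary=""):
        self.nodes[name] = {"kind": kind, "summary": summary}

    def add_edge(self, source, relation, target):
        self.out_edges[source].append((relation, target))
        self.in_edges[target].append((relation, source))

    def neighbors(self, name, relation):
        """Targets reachable from `name` over one edge of the given type."""
        return [dst for rel, dst in self.out_edges.get(name, []) if rel == relation]

    def impacted_by(self, name, relations=("calls", "depends_on", "inherits")):
        """Entities that reach `name`, directly or transitively, via `relations`."""
        seen = set()
        queue = deque([name])
        while queue:
            current = queue.popleft()
            for rel, src in self.in_edges.get(current, []):
                if rel in relations and src not in seen:
                    seen.add(src)
                    queue.append(src)
        seen.discard(name)
        return sorted(seen)


graph = CodeGraph()
graph.add_node("billing.py", "file")
graph.add_node("Invoice", "class", "Represents a customer invoice.")
graph.add_node("Invoice.total", "function", "Sums line items and rounds the result.")
graph.add_node("round_amount", "function", "Rounds a value to two decimals.")
graph.add_node("report.monthly", "function", "Builds the monthly revenue report.")

graph.add_edge("Invoice", "defined_in", "billing.py")
graph.add_edge("Invoice.total", "defined_in", "Invoice")
graph.add_edge("Invoice.total", "calls", "round_amount")
graph.add_edge("report.monthly", "calls", "Invoice.total")

print(graph.impacted_by("round_amount"))
```

In a production setup the same traversal would typically be expressed as a query against the graph database, but the idea is the same: following incoming edges from a node gives an impact list before any code is migrated.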

How Sigmoid drives modernization success

Sigmoid partners with enterprises to translate the promise of GenAI into measurable modernization outcomes through a phased, value-driven approach. Our solutions offer a structured journey that minimizes risk, delivers early wins, and scales with business priorities.

Case Study

| Problem | Solution | Impact |
|---|---|---|
| A leading MedTech company relied on manual processing of unstructured lab data (PDFs like drawings, labels, and MICs), causing bottlenecks, errors, and inefficiencies. They also lacked the standardization and automation needed for a smooth Laboratory Information Management System (LIMS) migration. | Sigmoid implemented a two-phase GenAI solution: | |

Conclusion

Modernization is a critical step for enterprises looking to stay competitive in an AI-driven landscape. But success depends on more than just moving systems to the cloud; it requires rethinking how legacy platforms are understood, transformed, and integrated into today’s data ecosystem. GenAI brings the tools and intelligence needed to decode complex systems, automate transformation, and retain critical business logic along the way. With the right partner and a value-driven approach, organizations can move confidently toward a future where data platforms are efficient, scalable, and ready for innovation.

About the author

Shruti is a Presales Consultant at Sigmoid with over 11 years of experience in the software industry. With a strong background in the retail and CPG domains, she specializes in crafting point-of-view documents and tailored proposals for clients exploring modern data platforms to enable advanced data and analytics use cases.

