Top 5 model training and validation challenges that can be addressed with MLOps

Reading Time: 5 minutes

Digitalization turned from being an advantage into a necessity for organizations across the industries in the last couple of years. As the world gets increasingly connected, the data being generated is also becoming more and more critical for decision-making, both strategic and operational. Parsing colossal amounts of data, analyzing it, and deriving operational and strategic insights from it requires revolutionary methodologies. ML is one of the latest and most promising of such technological advancements.

That said, ML is not like software, which can be coded and deployed to yield intended results. An ML model comprises coding that is meant to learn from data and take decisions and make predictions based on it. Whether the ML model will function optimally and make increasingly more accurate predictions and appropriate decisions depends upon how well it has been trained and validated.

Without rigorous training and validation, an ML model is as good as an engine without fuel. However, training an ML model is tricky, to say the least. This is because it squarely depends upon the quality and richness of training and validation data. That said, post deployment, an ML model can receive all types of data from all types of sources. Processing those data and arriving at an appropriate conclusion is only possible for an ML algorithm if it has been exhaustively trained and validated. Lest, the post-deployment diversity and volume of data will result in unwanted learning, rendering the model ineffective despite the in-lab success.

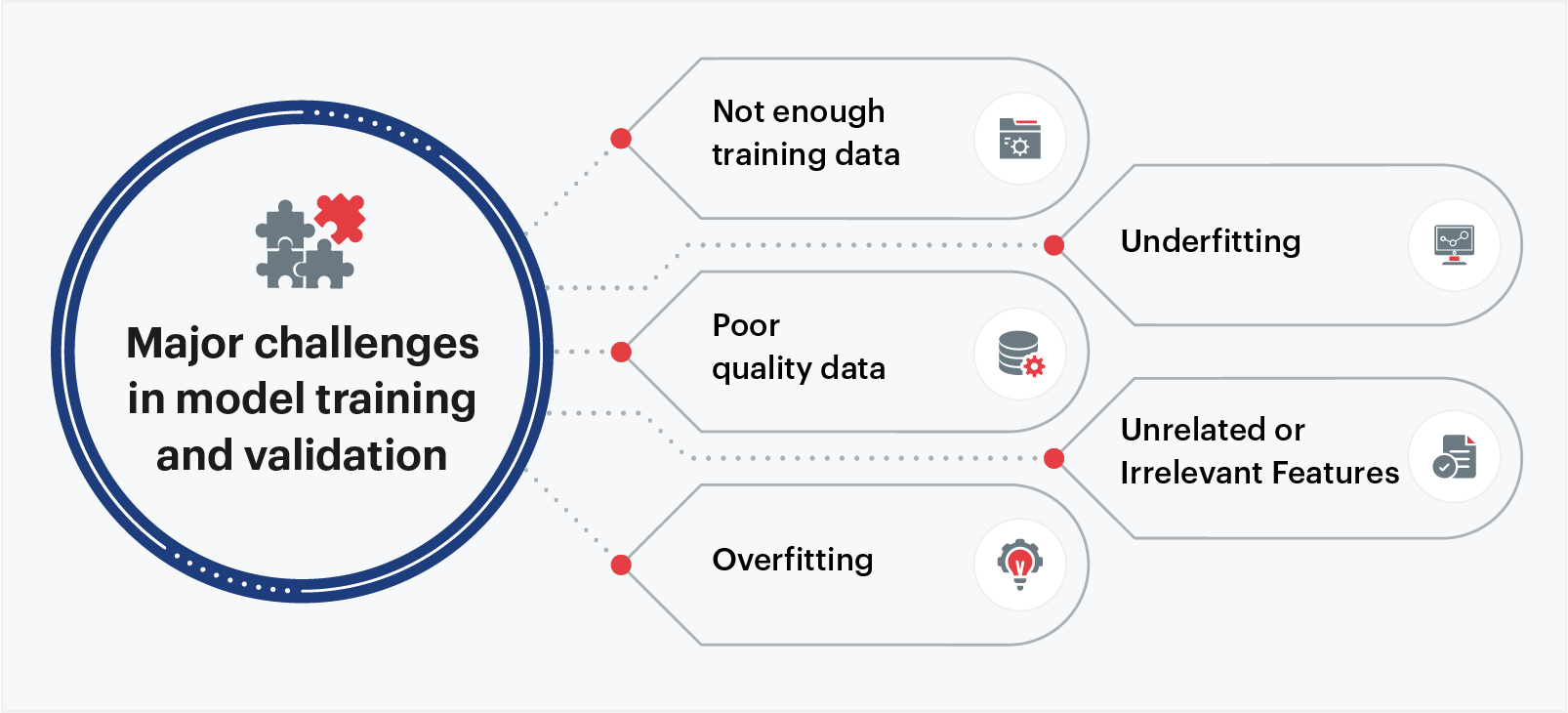

Major Challenges in Model Training and Validation

Replicating the real-life data supply for model training and validation is understandably very difficult. Understanding such challenges pertaining to the training and validation of an ML model is essential to addressing them and leveraging the technology to the fullest. Below are some of the most common challenges in training and validation of an ML model:

- Not enough training data: An ML model at the training stage is less intelligent than even a normal infant or toddler. It requires a huge number of examples of each element to be able to identify it accurately after deployment. The more diverse the data set fed to the model for each element, the higher the accuracy of its predictions would be. In other words, ML model training is a data-hungry process for which the data is never enough.

- Poor quality data: The ML model and infant comparison also explain the significance of the quality of data in model training and validation. If there’s a huge amount of data available but it’s a mix of relevant, irrelevant, and partially relevant data, the model will have tremendous difficulty in learning from such data. The more parsed the training data would be, the higher the probability of accurate predictions by the model. Similarly, if the training data does not represent new cases, the model will not be able to generalize well and its predictions will be biased.

- Overfitting: When an ML model is trained with biased data or is too complex training data, it leads to overfitting. Overfitting negatively affects the performance of the model after deployment despite being trained with huge amounts of data. For instance, using neural networks to create a model that can be solved using a linear regression model, or if the model is being trained with high epochs, the training accuracy is good training but it fails to generalize to validation/test data set.

- Underfitting: When an ML model is trained with such data that causes the model to establish an ambiguous relationship between input and output variables, it leads to underfitting. It also occurs when the algorithm is too simple to learn from the training data. For instance, using a linear ML model on a multi-collinear set.

- Unrelated Features: There may be instances when the training data could have a large number of irrelevant features compared to the relevant ones. In such cases, the ML system may not produce the desired results. Selecting appropriate features to train the model, also referred to as ‘Feature Selection’, can play a decisive role in defining the success of any ML project.

MLOps for Seamless Model Training and Deployment

Organizations today are looking at realizing tangible results of their ML projects. However, the challenges stated above make it evident that propelling an ML venture to production is no mean feat. That is why best practices to eliminate the hassles of developing an ML model and expedite its deployment have been identified and defined. These best practices are referred to as MLOps.

MLOps enables rapid, continuous production of ML applications at scale. It addresses the unique ML requirements and defines a new life cycle parallel to SDLC and CI/CD protocols. This makes an ML model more effective and its workflow more efficient. MLOps brings together the capabilities of data scientists, DevOps engineers, product developers, data engineers, and IT professionals, who work in tandem to develop, deploy and operate an ML model successfully and quickly. It comprises the following components that ensure maximum model performance and ROI:

- Model serving and pipelining

- Model service cataloging for in-production models

- Data and model versioning

- Monitoring

- Governance

- Infrastructure management

- Security

ML focuses on making machines increasingly intelligent in their specific tasks by harnessing the power of data. The technology is rapidly gaining traction in the fields of video surveillance, speech recognition, robotic training, medical diagnosis, product recommendations, and more. However, the effectiveness and efficiency of an ML model depend upon how well it has been trained and validated. This is where MLOps becomes imperative.

Without the iterative, agile best practices of MLOps, successful training, validation, and deployment of ML models, and deriving desired results from it, is extremely difficult. That only 22% of organizations have been successful in deploying an ML model in the last couple of years, despite increasing the investment, aptly highlights that it’s easier said than done. Strategic adoption of MLOps, especially when implemented by experts in the business, is the key to addressing the challenges that hinder the timely deployment of ML models.

About the Author

Fortythree is an engineer on the surface but a scientist at heart. He likes to be challenged and tackle difficult problems through trial and error. He has several years of experience in designing and building ML/ETL pipelines using various cloud technologies.

Featured blogs

Subscribe to get latest insights

Talk to our experts

Get the best ROI with Sigmoid’s services in data engineering and AI

Featured blogs

Talk to our experts

Get the best ROI with Sigmoid’s services in data engineering and AI