Demystifying the evolution of specialized GPT models: InstructGPT and ChatGPT


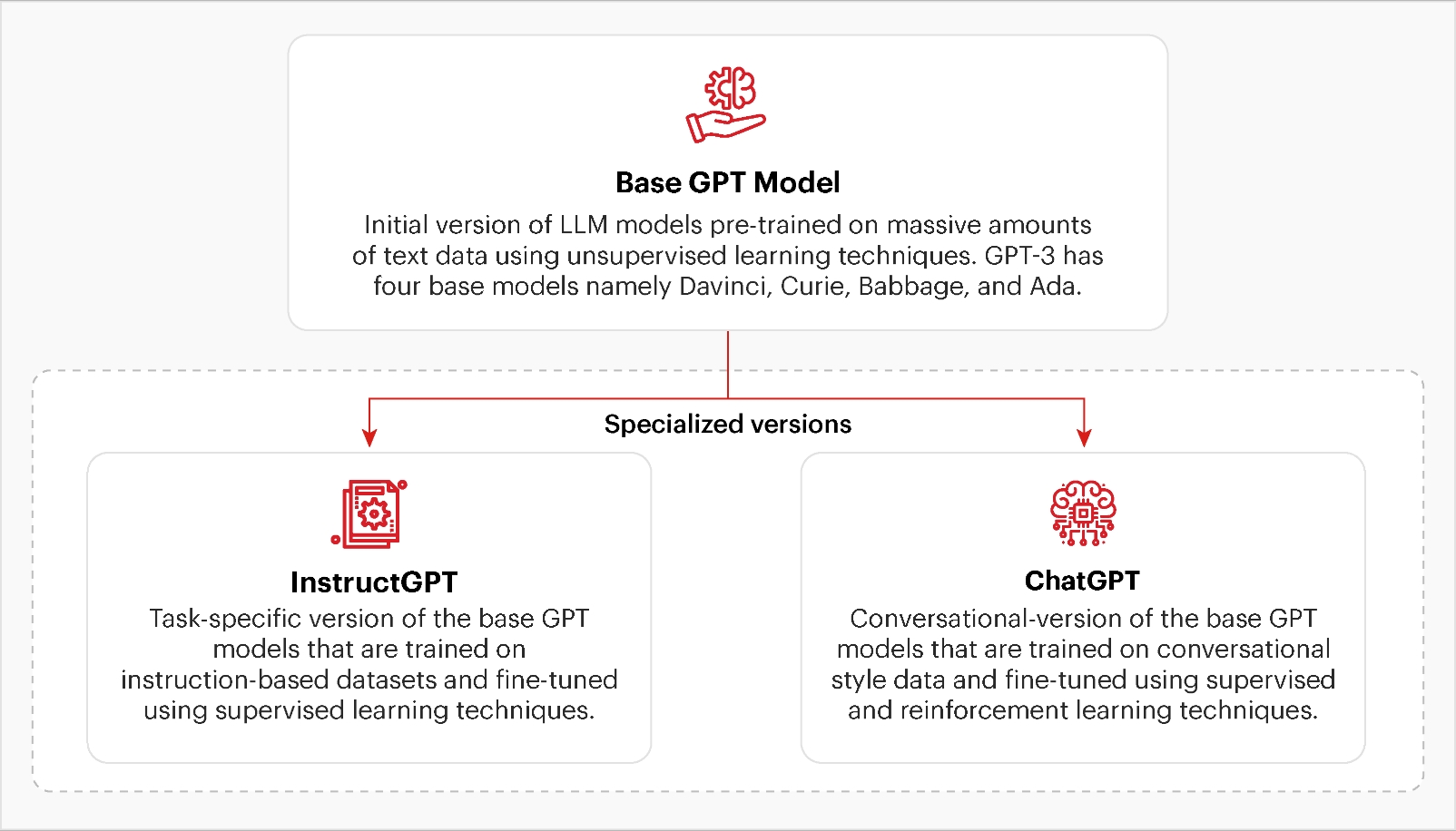

In our previous blog, we talked about the BaseGPT models and their evolution. In this blog, we look at the specialized versions of GPT (Generative Pre-trained Transformer) models, which are created from the base models through task-specific fine-tuning. The two major specialized versions are InstructGPT and ChatGPT.

GPT-3 base models

Base models are large-scale language models that are pre-trained on massive amounts of text data using unsupervised learning techniques. They are the foundation on which the more advanced iterations of the GPT series are built. These models are based on the Transformer architecture, a deep neural network architecture designed to process sequential data such as text.

The base models are trained on a large and diverse corpus of text data, such as books, articles, and web pages, using an unsupervised learning approach called causal (autoregressive) language modeling. Unlike masked language modeling, which hides some of the words inside a sentence and is used by models such as BERT, this approach trains the model to predict the next word in a sentence based on the context provided by the preceding words. This unsupervised pre-training process allows the model to develop a deep understanding of language structure, syntax, and semantics.
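As a rough illustration of the next-word prediction objective described above, the sketch below turns one sentence into (context, next word) training pairs. The function name `next_word_examples` is hypothetical, and splitting on whitespace is a simplifying assumption: real GPT models operate on subword tokens, not whole words.

```python
def next_word_examples(sentence: str) -> list[tuple[list[str], str]]:
    """Turn one sentence into (context, next word) training pairs."""
    # Assumption: whitespace splitting stands in for a real subword tokenizer.
    words = sentence.split()
    # Each pair uses all preceding words as context and the following word as target.
    return [(words[:i], words[i]) for i in range(1, len(words))]


for context, target in next_word_examples("the model predicts the next word"):
    print(" ".join(context), "->", target)
```

Every position in the text yields one training example, which is why no human labeling is needed for pre-training.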

Following pre-training, the base models can be fine-tuned on labeled data for specific tasks such as sentiment analysis, text completion, and question answering. Fine-tuning refines the model’s skills, making it more specialized and accurate.

InstructGPT

InstructGPT, an extension of OpenAI’s GPT-3 model, is a language model adept at following instructions and completing diverse tasks. It is trained on extensive datasets of instructions and tasks, which lets it grasp directives quickly and execute them efficiently. The core purpose of InstructGPT is automating repetitive tasks for businesses. For instance, users can give it simple prompts such as “compose a blog post on benefits of using InstructGPT” or “create a presentation on latest AI trends”.

InstructGPT’s capabilities include data entry, cleaning, summarization, and more. Users can entrust InstructGPT with diverse tasks like “extracting contact information from a list of customers” or “summarizing a research paper”. Notably, InstructGPT shines in its adaptability to custom datasets, allowing training with specific instructions and tasks tailored to unique requirements.

ChatGPT

ChatGPT is a large language model chatbot developed by OpenAI. It is built on the GPT-3.5 and GPT-4 foundational LLMs and is fine-tuned using supervised and reinforcement learning techniques. ChatGPT can engage in seamless dialogues with humans, comprehending their intent and delivering informative, engaging responses. It has emerged as an invaluable tool for a wide array of businesses, with the ability to perform diverse tasks ranging from customer service and education to marketing and more.

Creation of InstructGPT and ChatGPT models

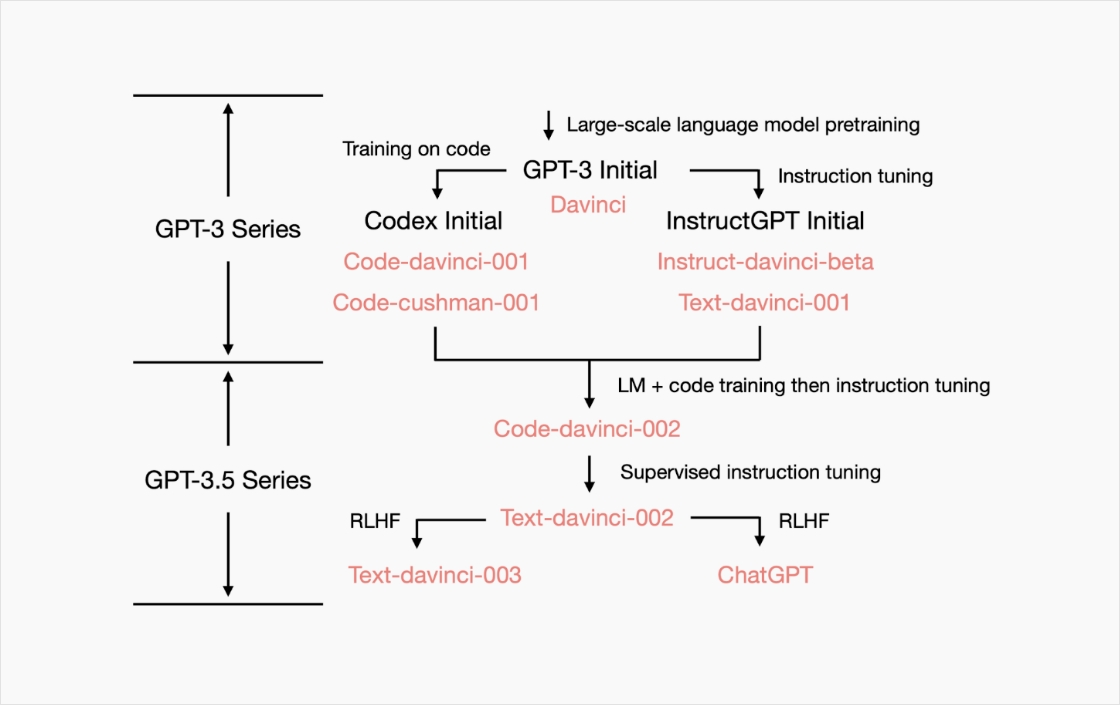

GPT-3 has 4 base versions out of which Davinci is the most powerful. The Davinci version is used to create Codex Initial and InstructGPT initial.

Source: University of Edinburgh, Allen Institute for AI

Step 1– Codex Initial is a language model that is specifically designed to understand and generate code. Trained on a vast amount of publicly available code on the internet, it excels in language understanding and snippet generation. Codex Initial has two variants: code-davinci-001 and code-cushman-001. The initial phase involves pre-training on a large corpus of code to comprehend syntax, semantics, and patterns. After pre-training, Codex undergoes a fine-tuning process with a curated dataset that includes demonstrations and comparisons of code snippets. This process aligns the code generation capabilities of the model with specific programming tasks, improving accuracy and reliability.

Step 1.1– The InstructGPT Initial model is created by fine-tuning the GPT-3 base model. It has two variants, namely instruct-davinci-beta and text-davinci-001. The base language model is fine-tuned using a specialized dataset specifically curated for natural language processing (NLP) tasks. This dataset maps programming instructions with code examples. The model learns to generate code aligned with instructions, refining its ability to produce code snippets. By fine-tuning the pre-trained base model on a tailored NLP dataset, InstructGPT is able to better understand and generate code from human-like instructions.

Step 2– Code-Davinci-002 results from combining InstructGPT initial and Codex initial models. This synergy forms a powerful model, tailored to programming tasks that can produce high-quality code outputs.

Step 3– Text-Davinci-002 is an InstructGPT model. To create it, OpenAI fine-tuned the pre-trained Code-Davinci-002 model on non-programming text, such as news articles, books, and other general text.

Step 4– Text-davinci-003 emerges from text-davinci-002 via reinforcement learning with human feedback. It undergoes extended training on a larger text and code dataset, featuring recent data as well as diverse and complex examples. The model is fine-tuned to become more specific and concise in its responses. Text-davinci-001, code-davinci-002, text-davinci-002, and text-davinci-003 are all InstructGPT models, and all of them except code-davinci-002 are available for use through the API.

Step 5– The ChatGPT model was created by applying reinforcement learning with human feedback to the pre-trained Text-Davinci-002 model. It was then fine-tuned on a large conversational dataset, such as online chat logs, customer support conversations, and social media interactions.
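The steps above describe a small family tree, which can be written down as a lookup table. The following is a minimal sketch that only restates the relationships given in this post; the `LINEAGE` table, the short training-step labels, and the `ancestors` helper are illustrative names, not part of any OpenAI API.

```python
# Parents and training step for each model, restating the steps above.
LINEAGE: dict[str, tuple[list[str], str]] = {
    "Codex initial": (["GPT-3 Davinci"], "pre-training and fine-tuning on code"),
    "InstructGPT initial": (["GPT-3 Davinci"], "fine-tuning on an instruction dataset"),
    "code-davinci-002": (["Codex initial", "InstructGPT initial"], "combining both initial models"),
    "text-davinci-002": (["code-davinci-002"], "fine-tuning on non-programming text"),
    "text-davinci-003": (["text-davinci-002"], "RLHF"),
    "ChatGPT": (["text-davinci-002"], "RLHF, then conversational fine-tuning"),
}


def ancestors(model: str) -> list[str]:
    """Return every upstream model of `model`, nearest first, without duplicates."""
    seen: list[str] = []
    queue = list(LINEAGE.get(model, ([], ""))[0])
    while queue:
        parent = queue.pop(0)
        if parent not in seen:
            seen.append(parent)
            # Models with no entry (the GPT-3 base) have no further parents.
            queue.extend(LINEAGE.get(parent, ([], ""))[0])
    return seen


print(ancestors("ChatGPT"))
```

Tracing `ChatGPT` this way shows that it and text-davinci-003 share the same immediate parent, text-davinci-002.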

Comparative overview of GPT-3 Base, InstructGPT and ChatGPT models

| Characteristics | GPT-3 Base model (Language generation) | InstructGPT model (Instruction following) | ChatGPT model (Interactive conversations) |
|---|---|---|---|
| Overview | Has knowledge of general features and patterns of the language it is trained on. | Enhanced for instruction-based tasks | Optimized for conversational AI |
| Usability | General-purpose language model | Follows instructions and generates task-oriented responses | Engaging in interactive conversations |
| Training data | Diverse corpus from the internet | InstructGPT dataset with demonstrations and instructions | Wide range of internet data including conversational data |
| Knowledge cutoff | September 2021 | September 2021 | September 2021 |
| Token limit | 4096 tokens | 4096 tokens | Varies (up to 4096 tokens) |
| Instruction understanding | Not explicitly designed for instructions | Enhanced to understand and follow instructions | Enhanced to understand and respond to conversational context |
| Examples of tasks and use cases (suggestive examples aligned with the capabilities of each variant) | Content generation, question answering, language translation, etc. | Instructional and guided writing, programming assistance, report generation, recipes, code generation, etc. | Interactive applications, automated response generation, customer support, website query chatbots, quick task menu option, etc. |
| Strengths | Model has access to a large knowledge base of information and can be used for broad applications. It can generate diverse and creative responses that are well-suited for various language tasks, such as translation or summarization. | Model understands and follows instructions better. It generates more accurate, task-specific responses. It is guided for programming or procedural tasks. | Model optimized for interactive, conversational use. It offers human-like conversational experience. |
| Weaknesses | BaseGPT output lacks instruction-specific context. It has limited ability to understand and follow specific instructions and may produce incorrect or nonsensical answers due to lack of instruction-specific guidance. | InstructGPT output can deviate from instructions. The model may struggle with complex/ambiguous prompts. It has limited domain-specific pre-training. It incurs slightly higher cost than the Base model. | ChatGPT may lack context in responses. The model struggles to maintain context in extended conversations. There is a potential for biased or controversial output from training. It still has limited understanding of nuanced or complex topics. |

GPT-3 Base, InstructGPT and ChatGPT models in action: Outputs generated for different tasks

The table below contains a few randomly selected tweets related to Samsung Galaxy F04 smartphones. For these tweets we will use base, InstructGPT and ChatGPT models to perform some tasks.

| Tweet | Tweet |
|---|---|
| Samsung Galaxy F04 Smartphone Launch, Will Be Available With 8 GB RAM For Rs 7499 | Samsung Galaxy F04 with MediaTek Helio P35, up to 8GB RAM launched in India: price, specifications, availability |
| #Samsung Galaxy F04 finally launched and will be a direct competition to #POCO C50 | Samsung Galaxy F04 budget smartphone goes official in India |
| Samsung Galaxy F04 launches in India, special introductory price announced at Rs. 7499 | Samsung Galaxy F04 with 6.5-inch HD+ Infinity-V display, MediaTek Helio P35 SoC launched in India |
| Presenting the all-new Samsung Galaxy F04, a budget smartphone that’s #F4Fast. Powered by 8GB RAM with RAM Plus, the Galaxy F04 sports a stylish gloss design. Sale starts 12th Jan, 12 noon, at just Rs. 7499, on Flipkart and #SamsungGalaxyF04 | Samsung Galaxy F04 at 7499 with 8 GB expandable RAM, 5000 mAh Battery & 2 major OS updates. Definitely, impressive & phone under 10,000 competition is back Sale on 12 Jan #Samsung #GalaxyF04 #SamsungGalaxyF04 |
| The Samsung Galaxy F04 is presented. The phone is based on the MediaTek Helio P35 SoC and runs Android 12 with a proprietary OneUI interface. The phone received a PLS screen with a diagonal of 6.5 inches, a resolution of 1600 x 720 pixels and a frame rate of 60 Hz. | The Samsung Galaxy F04 is here with little in the way of surprises. It’s clad in Jade Purple and Opal Green. Inside, there’s a MediaTek Helio P35 12nm chipset paired with 4GB of RAM. You get 64GB of base storage that can go up to 1TB through the microSD card |
| Samsung Galaxy F04 with MediaTek Helio P35, up to 8GB RAM launched in India priced at Rs 7,499. Samsung Galaxy F04 specifications include 6.5-inch HD+ display MediaTek Helio P35 SoC 5,000mAh battery 13MP dual rear cameras 5MP selfie snapper | The Samsung Galaxy F04 has become official in India! Take a look at the main features: 13MP primary camera 5MP selfie camera MediaTek Helio P35 6.5″ HD+ 60Hz IPS LCD display Fast charging 15W & 5000mAh battery. #Samsung #GalaxyF04 |
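The post does not state exactly how the tweets and the task instruction were combined before being sent to each model. As a hedged sketch of one plausible layout, the hypothetical `build_prompt` helper below numbers the tweets and appends the instruction on its own line; the "Tweets:" label and the numbering are assumptions made for illustration.

```python
def build_prompt(tweets: list[str], instruction: str) -> str:
    """Number the tweets and append the task instruction after a blank line."""
    numbered = "\n".join(f"{i}. {tweet}" for i, tweet in enumerate(tweets, start=1))
    # Assumed layout: context first, instruction last.
    return f"Tweets:\n{numbered}\n\n{instruction}"


tweets = [
    "Samsung Galaxy F04 budget smartphone goes official in India",
    "#Samsung Galaxy F04 finally launched and will be a direct competition to #POCO C50",
]
print(build_prompt(tweets, "Summarize the tweets"))
```

The same helper can be reused for the other tasks by changing only the instruction string, which keeps the context identical across the three models being compared.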

Task 1– Summarizing tweets

| Prompt used | GPT-3 Base output | InstructGPT output | ChatGPT output |
|---|---|---|---|
| Summarize the tweets | Garbage output (python code) | Samsung has launched the Galaxy F04 in India with a MediaTek Helio P35, up to 8GB RAM, 6.5-inch HD+ Infinity-V display, 13MP dual rear cameras, 5MP selfie snapper, 5,000mAh battery, and Android 12 with OneUI interface. It is priced at Rs 7,499 and will be available from January ‘23 | The Samsung Galaxy F04 has been launched in India at a price below Rs 8,000. It features a MediaTek Helio P35 chipset and up to 8GB of RAM. The smartphone will be available with 8GB RAM for a price of Rs 7,499. Sales of the device will start on January 12. The Galaxy F04 is positioned as a budget smartphone and will compete directly with the POCO C50. It sports a 6.5-inch HD+ Infinity-V display and is powered by a 5,000mAh battery. The phone also comes with a 13MP dual rear camera setup and a 5MP selfie camera. It runs on Android 12 with Samsung’s OneUI interface. |

Analysis: The table above shows that the GPT-3 base model fails to summarize the tweets, while the InstructGPT and ChatGPT models perform better. The summary generated by the InstructGPT model is informative and precise, while the ChatGPT summary is more elaborate. For example, the ChatGPT model also mentions the competitor smartphone, a detail absent from InstructGPT’s summary. Both models provide strong summaries, with ChatGPT having a slight edge.

Task 2– Querying/Answering questions

| Prompts used | GPT-3 Base output | InstructGPT output | ChatGPT output |
|---|---|---|---|
| Which device is being talked about in the given sentences? | Garbage output (python code, importing random libraries) | The device being talked about in these sentences is the Samsung Galaxy F04 smartphone. | The device being talked about in these sentences is the Samsung Galaxy F04 smartphone |
| What is the name of the competitor product of Samsung F04? | *Garbage output* #Samsung Galaxy F04 finally launched and will be a direct competition to #POCO C50. Presenting the all-new Samsung Galaxy F04, a budget smartphone that’s #F4Fast. Powered by 8GB RAM with RAM Plus, the Galaxy F04 sports a stylish gloss design. Sale starts 12th Jan, 12 noon, at just Rs. 7499, on Flipkart and #SamsungGalaxyF04 | The competitor product of Samsung F04 is POCO C50. | ChatGPT: The competitor product of Samsung Galaxy F04 could be the POCO C50. |
| Can you specify the camera details of the smartphone? | *Garbage output* The Samsung Galaxy F04 has become official in India! Take a look at the main features: 13MP primary camera, 5MP selfie camera, MediaTek Helio P35, 6.5″ HD+ 60Hz IPS LCD display, Fast charging 15W & 5000mAh battery. #Samsung #GalaxyF04 Samsung Galaxy F04 budget smartphone goes official in India Samsung Galaxy F04 with 6.5-inch HD+ Infinity-V display, MediaTek Helio P35 SoC launched in India | Camera Details: 13MP dual rear cameras, 5MP selfie snapper | Based on the provided sentences, here are the camera details for the Samsung Galaxy F04: – 13MP primary camera – 5MP selfie camera – Dual rear cameras (13MP) – Selfie snapper (5MP) |

Analysis: In this task, the BaseGPT model fails to answer any of the questions, whereas both the InstructGPT and ChatGPT models answer every question accurately.

Task 3– Topic Creation

| Prompt used | GPT-3 Base output | InstructGPT output | ChatGPT output |
|---|---|---|---|
| Create short topics/titles based on the smartphone features | Garbage output (python code) | 1. Samsung Galaxy F04 launched in India 2. Samsung Galaxy F04 with MediaTek Helio P35 3. Samsung Galaxy F04 Price in India 4. Samsung Galaxy F04 vs POCO C50 5. Samsung Galaxy F04 budget smartphone 6. Samsung Galaxy F04 introductory price 7. Samsung Galaxy F04 specs 8. Samsung Galaxy F04 features | 1. MediaTek Helio P35 chipset 2. Up to 8GB RAM 3. 6.5-inch HD+ display 4. 13MP dual rear cameras 5. 5MP selfie camera 6. 5000mAh battery 7. Fast charging (15W) 8. Expandable storage (microSD) 9. Android 12 with OneUI interface 10. Flipkart availability 11. Design and color options 12. Sub-10,000 price range 13. IPS LCD display with 60Hz refresh rate 14. User-friendly interface (OneUI) 15. Impressive battery life |

Analysis: The table above illustrates that the Base model struggles to generate topics, whereas the InstructGPT and ChatGPT models perform the task better. The ChatGPT model produces a greater number of detailed topics whereas the InstructGPT model generates more high-level topics.

Note– GPT models are not deterministic in nature, leading to slight variation in output each time for the same prompt.
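One common source of this variation is that the next token is sampled from a probability distribution rather than always being the single most likely one. The sketch below illustrates the idea with temperature-scaled sampling. The scores are made up, `sample_token` is a hypothetical helper, and treating a temperature of zero as "always pick the top token" is an assumption of this sketch, not a description of how any particular API behaves.

```python
import math
import random


def sample_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one token from softmax(logits / temperature)."""
    if temperature <= 0:
        # Assumption: zero temperature means greedy decoding (top token only).
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Made-up scores for three candidate next words (illustrative only).
logits = {"budget": 2.0, "new": 1.5, "stylish": 0.5}
rng = random.Random()
print([sample_token(logits, 1.0, rng) for _ in range(5)])  # varies from run to run
print([sample_token(logits, 0.0, rng) for _ in range(5)])  # always "budget"
```

Lower temperatures concentrate the probability on the highest-scoring token, which makes repeated runs more alike.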

Limitations of GPT models

- Limited Context/Max Tokens: GPT models have a finite context window (retaining context up to 4096 tokens), so information from long texts can be lost.

- Rate limits: Rate limits, in the context of GPT models, refer to the constraints placed on the number of requests or the amount of computational resources that can be used within a specific time period. There are two types of rate limits when using GPT models:

  - Requests per minute: The number of API requests per minute is limited. The limit can be increased with a paid API, but it remains a constraint when a high number of requests has to be processed.

  - Tokens per minute: The number of tokens passed to the API per minute is limited. The limit can be increased with a paid API, but it remains a constraint when a huge volume of data has to be processed.

- Lack of Reasoning: While GPT models excel at generating human-like text, they may struggle with common-sense reasoning, sometimes providing plausible yet unrealistic answers.

- Safety and Ethical Concerns: GPT models may generate harmful, misleading, or malicious content if misused, requiring careful handling, especially in public applications.

- Biases in Responses: GPT models might reflect biases present in the training data, yielding politically biased, offensive, or discriminatory responses. Efforts are needed to mitigate these biases, but they may still exist to some extent.
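The first two limitations in the list above are usually handled in client code. The following is a minimal sketch under stated assumptions: whitespace-separated words stand in for real tokens, the limits are arbitrary example values, and `chunk_words` and `MinuteBudget` are hypothetical helpers rather than part of any OpenAI library. The budget uses a simple fixed 60-second window, which may differ from how a provider actually enforces its limits.

```python
def chunk_words(text: str, max_tokens: int) -> list[str]:
    """Split text into pieces of at most max_tokens whitespace-separated words."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()  # assumption: one word is roughly one token
    return [" ".join(words[i:i + max_tokens]) for i in range(0, len(words), max_tokens)]


class MinuteBudget:
    """Tracks requests and tokens used within the current 60-second window."""

    def __init__(self, max_requests: int, max_tokens: int):
        self.max_requests = max_requests
        self.max_tokens = max_tokens
        self.window_start = 0.0
        self.requests = 0
        self.tokens = 0

    def allow(self, tokens: int, now: float) -> bool:
        """Return True and record the usage if the request fits both limits."""
        if now - self.window_start >= 60:
            # A new window has started: reset both counters.
            self.window_start, self.requests, self.tokens = now, 0, 0
        if self.requests + 1 > self.max_requests or self.tokens + tokens > self.max_tokens:
            return False
        self.requests += 1
        self.tokens += tokens
        return True


budget = MinuteBudget(max_requests=2, max_tokens=100)
print(chunk_words("one two three four five", 2))
print(budget.allow(60, now=0.0), budget.allow(60, now=1.0))
```

The timestamp is passed in explicitly so the behavior is easy to check; in a real client it would typically come from `time.monotonic()`, and a rejected request would be retried after a delay.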

Conclusion

In general, specialized fine-tuned GPT versions outperform base models across tasks due to continuous training on quality datasets, enhancing their contextual understanding. InstructGPT and ChatGPT models perform similarly, with the ChatGPT model slightly edging ahead. This is likely due to its ability to generate outputs in conversational format. Notably, as prompts become more detailed, the outputs of both the models tend to become more similar in nature.

About the authors:

- Bhaskar Ammu is a Senior Lead Data Scientist at Sigmoid. He specializes in designing data science solutions for clients, building database architectures, and managing projects and teams.

- Ankit Mehra is a Senior Data Scientist at Sigmoid. He specializes in analytics and ML-based data solutions.

- Malhar Yadav is an Associate Data Scientist at Sigmoid and a coding and ML enthusiast.
