9 things you need to know about microservices

Reading Time: 7 minutes

The rise of microservices

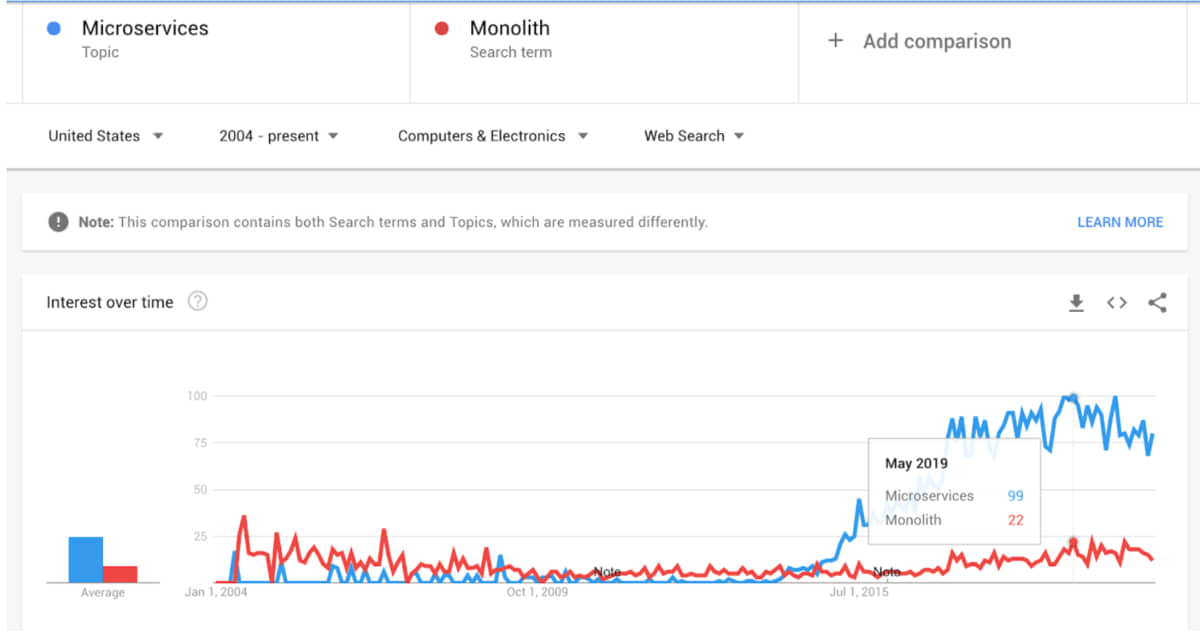

The chart above shows that interest in microservices has been trending upward since 2015.

This is largely because Docker and Kubernetes, which matured around that time, drastically reduced the effort and resources needed to deploy and scale microservices. Before that, lengthy deployment times made this arduous.

What is a Microservice?

Someone coming from a monolith background might say: “We had a module, they split it out and called it a microservice. What difference does that make, other than the overhead of maintaining multiple repositories?”

The answer depends on how granular we want the services to be and how many people are available to maintain them. As a rough rule of thumb, one person can effectively own and maintain up to three services, and beyond about five, productivity tends to drop. It’s best to split a monolith only when there are enough people to manage the resulting microservices, and to draw the boundaries so that they seldom change, for example by subdomain or business capability. On the other hand, splitting too finely multiplies the calls between services and can saturate the network.

Microservices are an important concept in data engineering as well. Let us now look at the nine things you need to know about them.

- Testing only a fraction of the application

A given change rarely touches every component or module of an application. A microservices architecture takes advantage of this: you only have to update and test the fraction of the overall application that changed. Since other applications talk to the updated service only through its API, they see the same behavior unless you introduce a breaking change.

However, microservices do take more planning, more development time, and more coordination whenever an API changes. If you are willing to invest in that, you can enjoy the benefits of the microservices architecture without worrying about being paged at midnight.

Monolithic applications, on the other hand, become hard to maintain and release when many people work on the same project, because a good deal of time goes into resolving merge conflicts. Having to redeploy the whole application for a small change in a single module can also be impractical.
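To make “breaking change” concrete, here is a minimal sketch. It assumes, purely for illustration, that an API response is described by a flat mapping of field names to type names; real projects usually rely on schema or contract-testing tooling instead. Under this simplified model, removing a field or changing its type breaks existing clients, while adding a field does not.

```python
from typing import Dict, List

# Hypothetical schema format: field name -> type name, e.g. {"id": "int"}.
Schema = Dict[str, str]


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """Return the changes in `new` that would break clients of `old`.

    Removing a field or changing its type is breaking; adding a new
    field is treated as backward compatible.
    """
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field '{field}'")
        elif new[field] != old_type:
            problems.append(f"field '{field}' changed from {old_type} to {new[field]}")
    return problems


if __name__ == "__main__":
    v1 = {"id": "int", "name": "str"}
    v2 = {"id": "int", "name": "str", "email": "str"}  # additive change: safe
    v3 = {"id": "str", "email": "str"}                 # type change and removal
    print(breaking_changes(v1, v2))  # []
    print(breaking_changes(v1, v3))  # ["field 'id' changed from int to str", "removed field 'name'"]
```

A check like this can run in the service's build, so only a release that reports problems needs coordination with the teams consuming the API.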

- Learning from failures

Software projects are often more complicated than we think. No matter how carefully we fill in the potholes, some scenario or edge case will still be left out and cause issues.

So we should always design systems for failure. As the saying goes, a system that fails fast and recovers fast is far better off than one that doesn’t.

Kubernetes, which originated at Google, is built with this in mind. With Kubernetes we can deploy and roll back changes within minutes (sometimes even seconds), as opposed to hours without it. It is wise to acknowledge that there will be defects, fix them, learn from them, and automate those learnings into the process. This also aligns with the core philosophy of Agile.

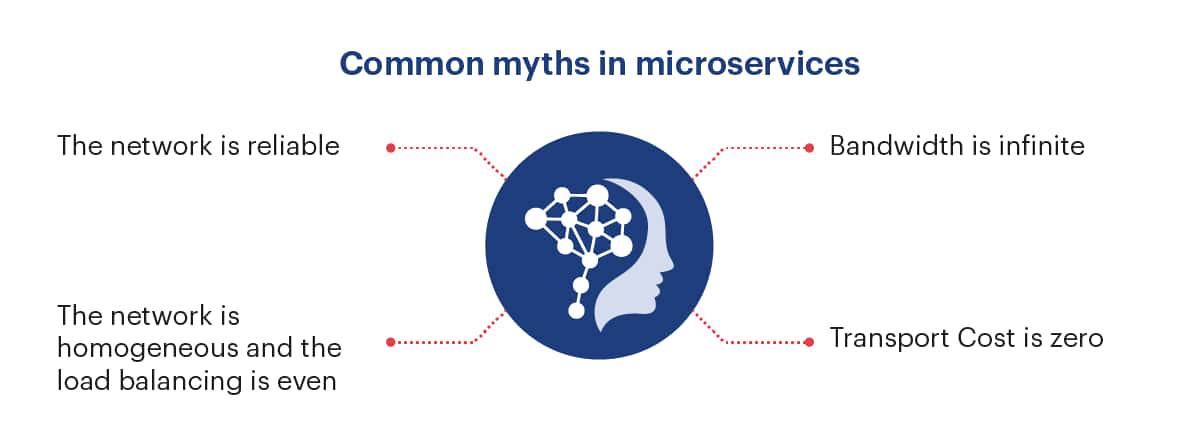

It is also important to be aware of some common myths, often called the fallacies of distributed computing:

- The network is reliable.

- The network is homogeneous and the load balancing is even.

- Bandwidth is infinite.

- Transport cost is zero.
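Because the network is not reliable, a client should expect transient failures when it calls another service. One common way to cope, not described in the original article, is to retry with exponential backoff and jitter. The sketch below assumes the remote call is wrapped in a zero-argument function and that connection errors and timeouts are the transient failures worth retrying. Retries like this are only safe for idempotent operations.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Invoke a remote call, retrying transient network errors.

    Sleeps base_delay * 2**attempt seconds plus random jitter between tries,
    and re-raises the last error once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the failure
            delay = base_delay * (2 ** attempt)
            # Jitter spreads out retries from many clients hitting the same service.
            time.sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")
```

Capping the number of attempts matters: unbounded retries from many clients add load to a service that is already struggling, which is exactly the kind of network saturation discussed later.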

- Blocking queries

The concept of failing fast is crucial for microservices. It doesn’t make sense to wait an hour for a query fired from a dashboard, especially since that query also blocks the other queries queued behind it. Instead, we configure a timeout and fail the query fast. Why is failing fast especially important in microservices? In a monolithic application there is only one service, so it’s fairly simple to identify failures. In a microservices architecture, without timeouts we can’t easily tell which slow service is causing the system to hang.
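A minimal sketch of failing fast with a timeout, using Python’s `asyncio.wait_for`. The downstream call is simulated with `asyncio.sleep`, and the service names are hypothetical. Including the service name in the error addresses the problem described above: it tells us which dependency was slow.

```python
import asyncio


async def fake_downstream(latency: float) -> str:
    # Hypothetical stand-in for a call to another service that takes `latency` seconds.
    await asyncio.sleep(latency)
    return "result"


async def call_service(name: str, latency: float, timeout: float) -> str:
    """Fail fast: abandon the call if it takes longer than `timeout` seconds.

    asyncio.wait_for cancels the pending call on timeout, so it stops
    holding resources, and the error names the slow service.
    """
    try:
        return await asyncio.wait_for(fake_downstream(latency), timeout=timeout)
    except asyncio.TimeoutError:
        raise TimeoutError(f"{name} did not respond within {timeout}s") from None


if __name__ == "__main__":
    print(asyncio.run(call_service("inventory", latency=0.01, timeout=1.0)))  # result
    try:
        asyncio.run(call_service("reports", latency=5.0, timeout=0.1))
    except TimeoutError as err:
        print(err)  # reports did not respond within 0.1s
```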

- Kubernetes – the saving grace for scaling applications

In an imperfect world, machines die, and so do the applications running on them, taking the user experience down with them. Kubernetes addresses this with auto-healing: it restarts failed containers and reschedules workloads from failed machines onto healthy ones.

When services run on Kubernetes, such failures are much less of a worry. There is a catch, though: to avoid disrupting the user experience, we need multiple instances of each service running on different machines. Yet keeping many instances running at all times is wasteful. Microservices on Kubernetes solve this by scaling up and down as needed; Kubernetes can be seen as the suit that lets our application scale with the incoming load. Since Kubernetes takes care of the painful part of microservices (deploying many services across many machines), a monolith has very little advantage left.
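On Kubernetes, load-driven scaling like this is typically handled by the Horizontal Pod Autoscaler (HPA). The Kubernetes documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that arithmetic, clamped to a minimum and maximum replica count; the real controller also applies stabilization windows, pod readiness handling, and multiple metrics, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA's core scaling rule (simplified).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three replicas averaging 90% CPU against a 50% target give `ceil(3 * 1.8) = 6` replicas, while four replicas at 20% CPU scale down to `ceil(4 * 0.4) = 2`.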

- Single points of failure

Often there is that one application, service, or server that everyone depends on, be it a proxy server, an authentication service, a master node, or a database. That one thing everyone depends on is commonly called a single point of failure.

Why does this matter? A monolith puts everything in a single application, so we redeploy the whole application for every minor change. Every deployment carries risk, and if one part goes down, everything goes down with it.

In microservices, this risk still exists, but only for critical services, and a failed upgrade affects only the features that depend on the upgraded microservice.

- Microservices don’t DIY

Since each microservice is a fraction of what would otherwise be a monolith, it is not expected to do everything by itself. Each has a specific job and focuses on doing it well, without worrying about other services. When a service needs information owned by another service, it simply asks that service.
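The sketch below illustrates this delegation, together with the point made about single points of failure that an outage should only affect the features depending on the failed service. A hypothetical product-page service asks a separate recommendations service for related products; the client is assumed to be any function that takes a product ID and returns a list of IDs. If that service is unavailable, the page is still served, just without recommendations.

```python
from typing import Callable, Dict, List, Union

# Hypothetical client for a separate recommendations service:
# takes a product ID and returns related product IDs.
RecommendationsClient = Callable[[str], List[str]]
Page = Dict[str, Union[str, bool, List[str]]]


def render_product_page(product_id: str, get_recommendations: RecommendationsClient) -> Page:
    """Build a product page, asking another service for recommendations.

    If the recommendations service is down, only that section of the page
    is lost; the rest of the page is still served.
    """
    page: Page = {"product_id": product_id, "recommendations": [], "degraded": False}
    try:
        page["recommendations"] = get_recommendations(product_id)
    except (ConnectionError, TimeoutError):
        # Degrade gracefully instead of failing the whole page.
        page["degraded"] = True
    return page
```

Passing the client in as a function keeps the page logic independent of how the other service is reached (HTTP, gRPC, or a test stub).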

- Communication is the Key

Since a microservice doesn’t do everything by itself, it asks the corresponding service, which may in turn ask others, until the request is fulfilled. Communication therefore becomes key in a microservices architecture, whereas in a monolith the application already has everything it needs in-process.

All of this communication happens over the network. When many microservices talk to each other, the network can saturate, leading to higher latencies or worse. So while designing microservices, keep only as many services as are actually required. Measuring success by the number of microservices in place is a mistake.
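A small worked example of why fewer hops help, assuming each service synchronously calls the next one in a chain and that failures are independent. Latencies add up across hops, and since the request succeeds only if every service is up, availabilities multiply. The figures are illustrative, not measurements.

```python
import math
from typing import Sequence


def chain_latency_ms(hop_latencies_ms: Sequence[float]) -> float:
    """End-to-end latency when each service synchronously calls the next."""
    return sum(hop_latencies_ms)


def chain_availability(availabilities: Sequence[float]) -> float:
    """Probability that every service in the chain is up at once,
    assuming failures are independent: the product of the availabilities."""
    return math.prod(availabilities)


if __name__ == "__main__":
    print(chain_latency_ms([5, 10, 20]))                # 35
    print(round(chain_availability([0.999] * 5), 4))   # 0.995
    print(round(chain_availability([0.999] * 20), 4))  # 0.9802
```

Even with every service at 99.9% availability, a chain of twenty drops to roughly 98%, which is one concrete reason not to treat the number of services as a measure of success.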

- Every service is different in its own way

Different parts of a monolithic application have different needs. Some are very memory-intensive, some have high processing requirements, and some have heavy communication needs, either with storage or across the network.

If the application has such varied requirements, it’s best to split those parts up and scale each one independently. Moreover, scaling algorithms can benefit greatly from separating the CPU-intensive and memory-intensive parts of the application.
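A simplified model of what independent scaling saves. It assumes every monolith replica must carry every component, so the whole application is replicated as many times as its busiest component needs, while split services are each replicated only as needed. The component names, resource figures, and instance counts are hypothetical, and real clusters add per-process overhead and bin-packing effects that this ignores.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Component:
    name: str
    cpu_cores: float  # CPU one instance of this component needs
    memory_gb: float  # memory one instance of this component needs
    instances: int    # instances needed to handle this component's load


def monolith_cost(parts: List[Component]) -> Tuple[float, float]:
    """(CPU, memory) reserved when the parts ship as one monolith.

    Every monolith replica carries every component, so the whole application
    is replicated as many times as its busiest component needs.
    """
    replicas = max(p.instances for p in parts)
    return (replicas * sum(p.cpu_cores for p in parts),
            replicas * sum(p.memory_gb for p in parts))


def microservices_cost(parts: List[Component]) -> Tuple[float, float]:
    """(CPU, memory) reserved when each part is scaled independently."""
    return (sum(p.instances * p.cpu_cores for p in parts),
            sum(p.instances * p.memory_gb for p in parts))


if __name__ == "__main__":
    parts = [
        Component("search", cpu_cores=2.0, memory_gb=1.0, instances=5),   # CPU-heavy, popular
        Component("reports", cpu_cores=0.5, memory_gb=8.0, instances=1),  # memory-heavy, rarely used
    ]
    print(monolith_cost(parts))       # (12.5, 45.0)
    print(microservices_cost(parts))  # (10.5, 13.0)
```

In this made-up case, most of the monolith’s memory goes to four unnecessary copies of the memory-heavy reports component, which only had to be replicated because search was busy.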

And if one part of the monolith is very popular, spinning up more instances of just that part can be beneficial.

Parts also differ in which language suits them best: some are best written in Go, some in Java, some in Python. A monolithic architecture is generally constrained to a single language or technology stack, whereas once the monolith is split into microservices, each service is free to use the language or technology that fits it best.

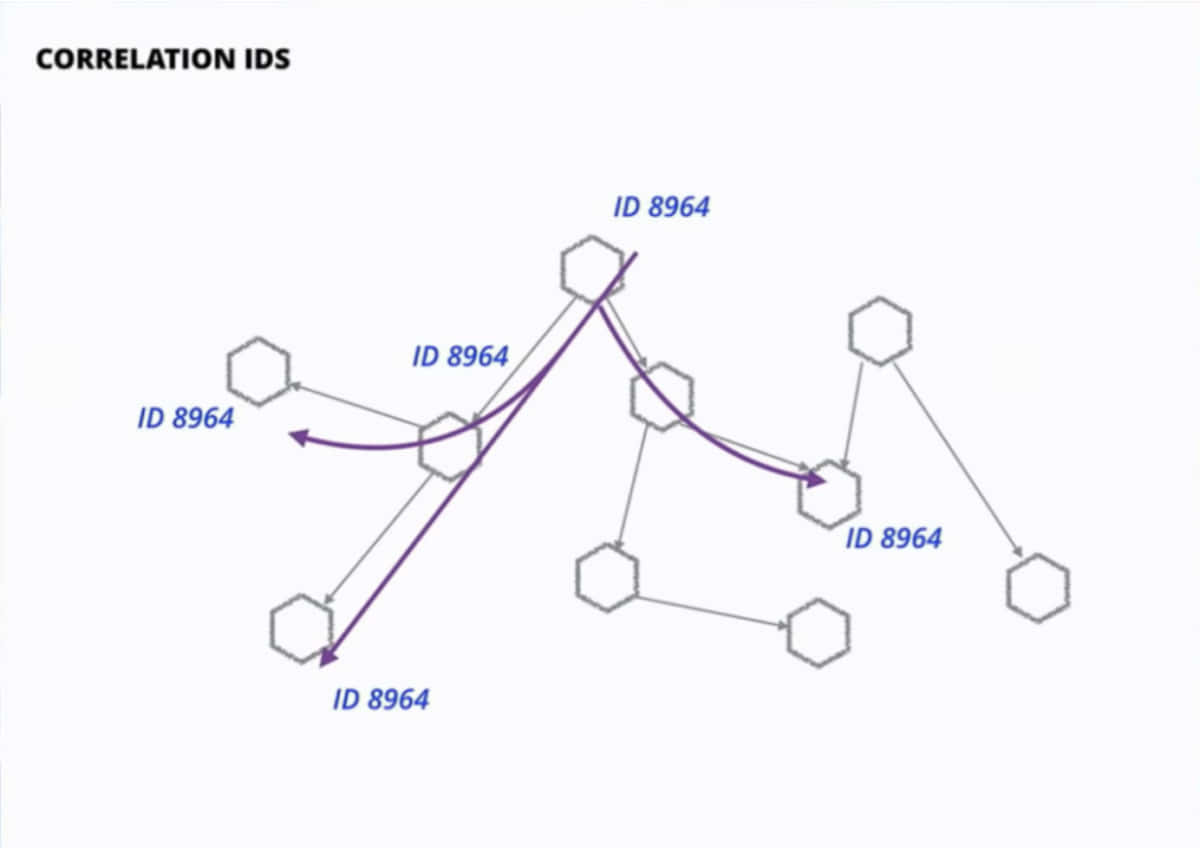

- Identifying failures

When an application fails in a monolithic architecture, there is a single place, a single log file, to look at. In a microservices architecture, tracing an issue back to its source can be tiring if the right tools are not in place. It is recommended to attach an ID to every request and pass it along to every service the request touches, and to run a log persistence service that collects all logs from Kubernetes and stores them for later analysis.
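A minimal sketch of request-ID propagation using only the Python standard library. It assumes requests carry the ID in an `X-Request-ID` header (a widespread convention, not a requirement), that `start_request` is called at the beginning of each request handler, and that `outgoing_headers` is merged into every call to another service; all of these names are hypothetical. Each log line then starts with the request ID, so once the logs are collected centrally, one search finds every service’s part of the story.

```python
import contextvars
import logging
import uuid

# Holds the ID of the request currently being handled in this context.
request_id_var = contextvars.ContextVar("request_id", default="-")

REQUEST_ID_HEADER = "X-Request-ID"  # assumed header name (common convention)


class RequestIdFilter(logging.Filter):
    """Attach the current request ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


def start_request(headers: dict) -> str:
    """Reuse the caller's request ID if present, otherwise mint a new one."""
    request_id = headers.get(REQUEST_ID_HEADER) or str(uuid.uuid4())
    request_id_var.set(request_id)
    return request_id


def outgoing_headers() -> dict:
    """Headers to send to downstream services so they log the same ID."""
    return {REQUEST_ID_HEADER: request_id_var.get()}


logger = logging.getLogger("orders")  # hypothetical service name
handler = logging.StreamHandler()
handler.addFilter(RequestIdFilter())
handler.setFormatter(logging.Formatter("%(request_id)s %(name)s %(message)s"))
logger.addHandler(handler)
logger.setLevel(logging.INFO)

if __name__ == "__main__":
    start_request({"X-Request-ID": "abc123"})
    logger.info("order created")  # logs: abc123 orders order created
    print(outgoing_headers())     # {'X-Request-ID': 'abc123'}
```

Using a `ContextVar` rather than a global keeps concurrent requests in an async server from overwriting each other’s IDs, since each task runs in its own copy of the context.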

What not to do with microservices

- Believing that a sprinkle of microservices will solve all development problems.

- Making the adoption of microservices the goal and measuring success in terms of the number of services written.

- Having multiple application development teams attempt to adopt the microservice architecture without any coordination.

- Attempting to adopt the microservice architecture (an advanced technique) without practicing, or committing to practice, basic software development techniques such as clean code, good design, and automated testing.

- Focussing on technology aspects of microservices, most commonly the deployment infrastructure, and neglecting key issues, such as service decomposition.

- Intentionally creating a very fine-grained microservice architecture.

- Retaining the same development process and organization structure that were used when developing monolithic applications.

Conclusion

Lately, the tech world has been buzzing with news of major companies such as Google and Netflix moving from monolithic to microservices architectures. Docker and Kubernetes have made microservices practical like never before. If the network and the coordination required during API version upgrades can be managed well, the microservices architecture offers a great many advantages. When the use case is very simple, or when an extremely small team handles a large use case with limited time for planning, a monolithic architecture is worth considering.

As application and software development trends continue to evolve, the debate between using microservices or leveraging traditional monolithic architectures will only become more pronounced. In the end, developers must understand what works for their teams and their product’s specific use cases.

About the authors

Nuthan is a Data Engineer at Sigmoid who is passionate about building reliable, responsive and highly scalable end-to-end solutions by using a mix of open source technologies and cloud services.

Assisted by Shreya, a Software Engineer at Sigmoid who builds query engines and ETL pipelines on Spark and BigQuery, and who was involved in migrating Sigview from a monolith to microservices.
