5 challenges of scaling Machine Learning models

Machine learning on big data has opened the door to new ways of achieving business goals. It supports better ML modeling, including training and productionizing. Productionizing an ML model means hosting, scaling, and running it on top of relevant datasets. Models in production also need to be resilient and flexible enough to absorb future changes and feedback. A recent Forrester study states that improving customer experience, profitability, and revenue growth are the key goals organizations plan to achieve with ML initiatives.

Despite its worldwide acclaim, ML modeling is hard to translate into concrete business gains. A range of engineering, data, and business concerns become bottlenecks when handling live data and putting ML models into production. In our poll, 43% of respondents said they hit roadblocks in ML model production and integration. ML adoption across organizations globally is increasing at an unprecedented rate, thanks to robust and inexpensive open source infrastructure, so it is important to understand what scaling means in machine learning and to ensure that ML models deliver the objectives businesses intend. To understand the common pitfalls in productionizing ML models, let’s dive into the top 5 scaling challenges that organizations face.

1. Complexities with Data

One would need about a million relevant records to train an ML model. And it cannot be just any data: data feasibility and predictability risks come into the picture. Assessing whether we have relevant datasets, and whether we get them fast enough to make predictions on top of them, isn’t straightforward. Getting contextual data is also a problem. In one of Sigmoid’s ML scaling engagements with Yum Brands, some of the company’s brands, like KFC (with a new loyalty program), didn’t have enough customer data. Having data isn’t enough either. Most ML teams start without a data lake and train ML models on top of their traditional data warehouses. With traditional data systems, data scientists often spend 80% of their time cleaning and managing data rather than training models. Strong governance and data cataloging are also required so that data is shared transparently and cataloged well enough to be reused. Because of this data complexity, the return on an ML model relative to the cost of maintaining and running it diminishes over time.
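A quick way to make the "do we have enough relevant data" question concrete is to measure it before any training starts. The following is a minimal sketch, not a prescribed method: `assess_readiness`, `ReadinessReport`, and the field names are hypothetical, and the one-million default simply mirrors the rough figure mentioned above.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class ReadinessReport:
    total_records: int
    complete_records: int          # rows with every required field present
    missing_rate: Dict[str, float]  # share of rows missing each field
    enough_data: bool


def assess_readiness(records: Iterable[dict],
                     required_fields: List[str],
                     min_records: int = 1_000_000) -> ReadinessReport:
    """Hypothetical helper: count usable rows and per-field gaps."""
    rows = list(records)
    total = len(rows)
    missing = {field: 0 for field in required_fields}
    complete = 0
    for row in rows:
        row_complete = True
        for field in required_fields:
            # Treat absent keys, None, and empty strings as missing.
            if row.get(field) in (None, ""):
                missing[field] += 1
                row_complete = False
        if row_complete:
            complete += 1
    missing_rate = {f: (c / total if total else 1.0) for f, c in missing.items()}
    return ReadinessReport(total, complete, missing_rate, complete >= min_records)


# Illustrative data only; real input would come from the warehouse or lake.
sample = [
    {"customer_id": 1, "spend": 10.0},
    {"customer_id": 2, "spend": None},
    {"customer_id": 3},
]
report = assess_readiness(sample, ["customer_id", "spend"], min_records=2)
print(report)
```

Running a check like this per brand or product line would surface cases such as a new loyalty program with too little customer history before modeling effort is spent on it.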

2. Engineering and Deployment

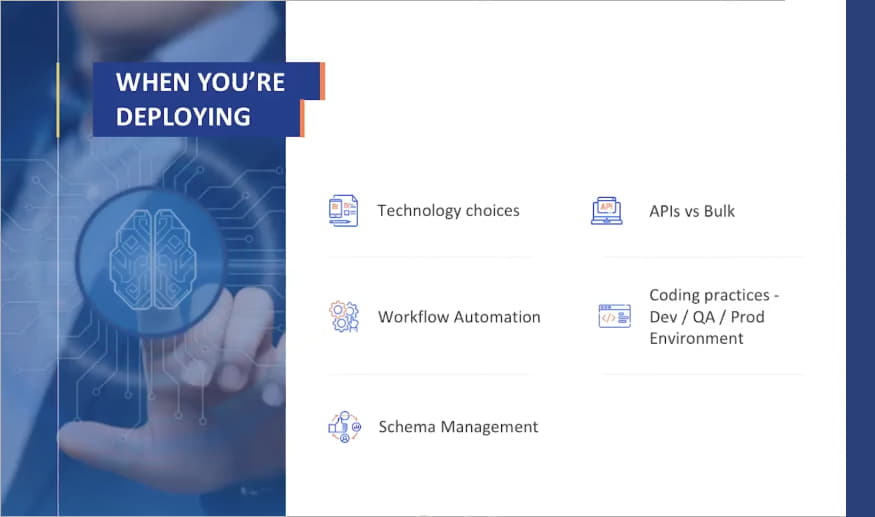

Once the data is available, the infrastructure and technical stack have to be finalized according to the use case and the need for future resilience. ML systems can be quite difficult to engineer, and a wide breadth of technology is available in the machine learning space. It is crucial to standardize the technology stacks used in different areas and to choose each one so that it doesn’t make productionizing harder. For instance, data scientists may use tools like Pandas and code in Python, but these don’t necessarily translate well to a production environment where Spark or PySpark is more desirable. Improperly engineered technical solutions can be costly. Lifecycle challenges, along with managing and stabilizing multiple models in production, can become unwieldy too.
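One way to soften the gap between notebook code and a production engine is to keep feature logic in plain, framework-independent functions that take one record and return one record. This is a sketch under that assumption; `order_features` and the record layout are hypothetical, and the wiring into a distributed engine is deliberately not shown.

```python
def order_features(order: dict) -> dict:
    """Hypothetical row-level feature function with no framework dependency."""
    items = order["items"]
    total = sum(item["price"] * item["qty"] for item in items)
    n_items = sum(item["qty"] for item in items)
    return {
        "order_id": order["order_id"],
        "total": round(total, 2),
        "n_items": n_items,
        # Guard against empty orders to avoid dividing by zero.
        "avg_item_price": round(total / n_items, 2) if n_items else 0.0,
    }


# Local experimentation: apply the function to an in-memory list.
orders = [
    {"order_id": "A1", "items": [{"price": 2.5, "qty": 2}, {"price": 5.0, "qty": 1}]},
    {"order_id": "A2", "items": []},
]
features = [order_features(o) for o in orders]
print(features)
```

Because the function only depends on its input record, the same logic can be unit tested locally and later mapped over records by whichever production engine the team standardizes on.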

3. Integration Risks

A scalable production environment that is well integrated with different datasets and modeling technologies is crucial for an ML model to be successful. Integrating different teams and operational systems is always challenging. Complicated codebases have to be made into well-structured systems ready to be pushed into production. In the absence of a standardized process for taking a model to production, the team can get stuck at any stage. Workflow automation is necessary so that different teams can plug into the workflow system and test. If the model isn’t tested at the right stage, the entire ecosystem has to be fixed at the end. Technology stacks have to be standardized, or else integration can be a real nightmare. Integration is also the time to make sure that the machine learning experimentation framework isn’t a one-time wonder. Otherwise, if the business environment changes or a catastrophic event occurs, the model will cease to provide value.
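The idea of testing at the right stage, rather than fixing everything at the end, can be illustrated with a tiny pipeline runner that validates the output of each stage before the next one starts. This is a simplified sketch; `run_pipeline`, `StageCheckError`, and the example stages are hypothetical names, not part of any specific workflow tool.

```python
from typing import Any, Callable, List, Tuple

# Each stage is (name, transform, check); the check validates the transform's output.
Stage = Tuple[str, Callable[[Any], Any], Callable[[Any], bool]]


class StageCheckError(Exception):
    """Raised when a stage's output fails its validation check."""


def run_pipeline(data: Any, stages: List[Stage]) -> Any:
    for name, transform, check in stages:
        data = transform(data)
        if not check(data):
            # Fail fast so the problem is attributed to the stage that caused it.
            raise StageCheckError(f"check failed after stage '{name}'")
    return data


def drop_negatives(values):
    return [v for v in values if v >= 0]


def scale_to_unit(values):
    top = max(values)
    return [v / top for v in values]


stages: List[Stage] = [
    ("clean", drop_negatives, lambda vs: len(vs) > 0),
    ("scale", scale_to_unit, lambda vs: all(0.0 <= v <= 1.0 for v in vs)),
]

print(run_pipeline([1, -2, 4], stages))
```

In this toy setup an input with no valid values stops at the "clean" stage with a named error instead of surfacing later as a confusing failure during scaling.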

4. Testing and Model Sustenance

Testing machine learning models is difficult, but it is as important as the other steps of the production process, if not more so. Understanding results, running health checks, monitoring model performance, watching out for data anomalies, and retraining the model together close the productionizing cycle. Even after the tests have run, a proper machine learning lifecycle management tool might be needed to catch issues that tests cannot reveal.
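Watching out for data anomalies often comes down to comparing live feature values against the values the model was trained on. The sketch below uses the population stability index (PSI) as one possible drift measure; the article does not prescribe a metric, so PSI, the function name, and the equal-width binning are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a training sample (expected) and a live sample (actual)."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the training range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range go into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # floor so empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [float(v) for v in range(100)]
live_same = list(training)
live_shifted = [v + 50 for v in training]

print(population_stability_index(training, live_same))
print(population_stability_index(training, live_shifted))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a sign of significant shift that may call for retraining, though the right threshold depends on the feature and the business context.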

5. Assigning Roles and Communication

Maintaining transparent communication across data science, data engineering, DevOps, and other relevant teams is pivotal to an ML model’s success. But assigning roles, granting fine-grained access, and monitoring every team is complex. Strong collaboration and deliberate over-communication are essential to identify risks across different areas at an early stage. Keeping data scientists deeply involved can also decide the future of the ML model.

In addition to the above challenges, unforeseen events such as the COVID-19 pandemic have to be watched out for. When customers’ buying behavior suddenly changes, past solutions cease to apply, and the lack of new data to adequately train models becomes a roadblock. Scaling ML models isn’t easy. Watch out for our next piece on the best practices to productionize ML models at scale.
